Batch-Insert/Update with Spring Data and JPA

Spring data and JPA are incredibly handy pieces of Java technology, which liberate the developer from the cumbersome task of manually writing SQL code and allow the use of Java POJOs as a programming interface. However, when it comes to batch-operations, you may face some serious performance challenges.

This article will give some insights on how to solve these challenges in an elegant and yet efficient way.

Our Scenario

We have a database with about 1 mio rows distributed over 10 tables. Every week we get a ZIP archive containing new AND updated entries. The number of entries varies, but it is roughly at about 50.000 entries – each entry as an individual file with about 100kb in raw size. One entry in the ZIP archive corresponds to one row in the database where the entire file is stored along with some metadata extracted from the data as additional columns. The link between an entry in the update file and the database is a, possibly composite, domain key. The database access is handled by a Spring Boot application, using Spring Data JPA and Hibernate, with 2nd level cache activated.

The Use Case: Update all entries in the database that already exist and add the new ones. Well, easier said than done!

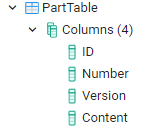

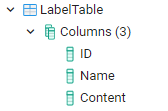

The Database

In our scenario we have a database with about 10 simple tables, each with

- 1 UUID as technical primary key

- One or more columns identifying the entry with an unique key from the domain

- N columns containing arbitrary data representing the business object

Those tables have no payload data columns in common, especially not the domain keys (only the technical primary key). The technical primary key (the UUID) is only known in the context of the system itself. It is generated upon creation of a row in the database and is not included in the update file data in particular. We’ll see in a bit, why that is a challenge.

For the sake of clarity, we will focus on two sample tables. The content-column serves as an arbitrary placeholder for the payload data, in reality distributed over several different columns.

The Model

The corresponding JPA entities are:

@Entity

@Table(name = "PartTable",indexes = @Index(columnList = "Number, Version", unique = true))

@Cacheable

public class PartEntity {

@Id

private String id;

@Column(name = "Number")

private String number;

@Column(name = "Version")

private int version;

@Column(name = "Content")

private String content;

}@Entity

@Table(name = "LabelTable",indexes = @Index(columnList = "Name", unique = true))

@Cacheable

public class LabelEntity {

@Id

private String id;

@Column(name = "Name")

private String name;

@Column(name = "Content")

private String content;

}The Challenges

As mentioned in the beginning, you will face multiple challenges when trying to implement the use case in this scenario. The challenges are:

- We need to check the database for existing entries, in order to decide whether to

UPDATEorINSERT. - We have many entries and a performance requirement, so a naive approach might end up with an unacceptable runtime.

- What happens to our RAM? Remember, we have

50.000 * ~100kb = ~ 4.7Gb. - And last but not least, can all that be done in a nice, generic way without tons of duplicated code? (Spoiler: Yes!)

The Solution

To make the solution more understandable we will solve the different challenges step by step until we have the complete solution.

First Things First: Querying the Database

How do we check the database efficiently for existing entries in Spring Data JPA? As mentioned before, the only link between the import data and the database is a, possibly composite, domain key. So, the naive and the slowest possible way of doing this would be one query per entity. Something like:

SELECT * from PartTable where Number = entity1Number AND Version = entity1Version;If you ever executed 50.000 statements - one after another - you know: that ain’t fun! Adding to the pure database round trip is the fact that with each query another entity must be handled in the Hibernate session. This leads to additional overhead, such as automatic dirty checks before each query. This overhead alone can be a performance killer on its own.

So how can we do better? Ideally, we would like to execute exactly one statement to query all existing entities. Something like:

SELECT * from PartTable where (Number = ?1 AND Version = ?2) OR (Number = ?3 AND Version = ?4) OR (Number = ?5 AND Version = ?6)...;Can this somehow be done with Spring Data JPA? You can use the IN (...) statement, unfortunately that works for single primitive keys and NOT for combined keys, like the ones we have. However, if you only have primitive keys e.g. a single string column, you can simply add the following method to your Spring Data repository.

List<PartEntity> findAllWhereValueIn(final List<String> values);In our case, we need some kind of dynamic queries. The ugly way of doing this would be to fiddle around with concatenating HQL snippets. Something like this:

StringBuilder builder = new StringBuilder("Select * from PartEntity where ");

for (Entity entity : newOrUpdatedEntities){

builder.append("(Number = :");

builder.append(entity.getNumber());

builder.append("AND Version = :");

builder.append(entity.getVersion());

builder.append(") OR ");

}This works, but:

- It ain’t cool!

- We might be prone to HSQL / SQL Injection, or we need a complex parameter handling.

- We break the clean pattern of Spring Data Repository Interfaces, as you need “real” code to execute query.

- Now we are tied to a specific Spring Data implementation (JPA).

Is there a better way?

May I present to you: The QuerydslPredicateExecutor

//package org.springframework.data.querydsl

public interface QuerydslPredicateExecutor<T> {

Optional<T> findOne(Predicate predicate);

Iterable<T> findAll(Predicate predicate);

Iterable<T> findAll(Predicate predicate, Sort sort);

Iterable<T> findAll(Predicate predicate, OrderSpecifier<?>... orders);

Iterable<T> findAll(OrderSpecifier<?>... orders);

Page<T> findAll(Predicate predicate, Pageable pageable);

long count(Predicate predicate);

boolean exists(Predicate predicate);

}But what’s this?

Querydsl is an extensive Java Framework that allows the dynamic (runtime) generation of type-safe queries via a Java API. The QuerydslPredicateExecutor is the connecting piece to seamlessly integrate Querydsl in your Spring Data Repositories. As you can see, we can provide some sort of Predicate (Querydsl) and get an Iterable<T> in return - awesome! But what is a Predicate? A Querydsl Predicate is the base interface for boolean expressions. So basically, any criteria you can define in Querydsl is a Predicate. To use the QuerydslPredicateExecutor we just have to extend the interface in all of our relevant repositories (you might wanna create your own “abstract” base repository for that like I did below).

@NoRepositoryBean // important! otherwise you'll get very weird errors

public interface BatchEnabledRepository<T, ID> extends CrudRepository<T, ID>, QuerydslPredicateExecutor<T> {

//let this be your "base" repository for all entities you see fit.

}Cool, but how do we get such a predicate? Well, this requires a bit of work, which we will not cover in this article, since excellent tutorials on this topic can be found in goodness of the internet. A good starting point is for example here: Intro to Querydsl. In short - we need Q-Classes!

Alternative: The same can be achieved with the Criteria API and JpaSpecificationExecutor. But the QueryDslPredicateExecutor also works for non JPA Repositories. See Advanced Spring Data JPA - Specifications and Querydsl

At this point we would be good to go, but where to put the “predicate creation code”? I decided in good old OOP matter to define a common interface and add a method to every entity class providing us a predicate specific for this entity.

public interface UpdateableEntity {

Predicate getKeyPredicate();

}

@Entity

@Table(name = "PartTable",indexes = @Index(columnList = "Number, Version", unique = true))

@Cacheable

public class PartEntity implements UpdateableEntity {

@Id

private String id;

@Column(name = "Number")

private String number;

@Column(name = "Version")

private int version;

@Column(name = "Content")

private String content;

@Override

public Predicate getKeyPredicate() {

return QPartEntity.partEntity.number.eq(number).and(QPartEntity.partEntity.version.eq(version));

}

}Finally we can query all existing entities from the database with the following code. For the sake of clarity, we have omitted the code that is necessary to read the entries to be imported (the ZIP file in my case). In our case, these entries are then available as detached JPA entities. This is the List<T> elements in the snippet below:

public <T extends UpdateableEntity> void updateOrInsert(List<T> elements, BatchEnabledRepository<T,?> repository) {

final List<Predicate> predicates = elements.stream()

.map(UpdateableEntity::getKeyPredicate)

.collect(Collectors.toList());

final Predicate predicate = com.querydsl.core.types.ExpressionUtils.anyOf(predicates.toArray(new Predicate[0]));

final Iterable<T> alreadySaved = repository.findAll(predicate);

final List<T> alreadySavedAsList = Lists.newArrayList(alreadySaved);

}Additional note: The method does actually not return void in our case but instead an object describing what happened (mainly for logging and user-feedback). So how many objects were updated, inserted and ignored (because they were final).

Great, we now found all existing entities in the database with one query! Now, lets look at how to patch them.

Patch existing Entities with new Content

The next problem we have is: we get e.g. 200 objects back – which import objects are those?

How do we figure out which of the input objects match which of the results found? A simple iteration through all elements and comparison would be highly inefficient again: O(n x m). Therefore, we want to use a lookup-map and for this, we want a key-object for each entity. Overriding hashcode & equals of the entities itself would not be useful, as this would have unwanted side effects with Hibernate / JPA, for example.

Therefore we need a way to create a key for each object. Additionally after we’ve done that we also need to patch the existing entity with the new content.

So let’s do that next. For that we’ll extend our UpdateableEntity interface

public interface UpdateableEntity<T> {

Predicate getKeyPredicate();

Object getKey();

void patch(final T t);

}

@Entity

@Table(name = "PartTable",indexes = @Index(columnList = "Number, Version", unique = true))

@Cacheable

public class PartEntity implements UpdateableEntity<PartEntity> {

@Id

private String id;

@Column(name = "Number")

private String number;

@Column(name = "Version")

private int version;

@Column(name = "Content")

private String content;

@Override

public Predicate getKeyPredicate() {

return QPartEntity.partEntity.number.eq(number).and(QPartEntity.partEntity.version.eq(version));

}

@Override

public Object getKey() {

// This could be any object, as long as hashCode() & equals() behaves as expected.

return new ImmutablePair<>(partNumber, version);

}

@Override

public void patch(final PartEntity entityToMerge) {

content = entityToMerge.content;

// As a reminder - in our real case this are more like a dozen fields

// of course NOT the identifying fields (number and version in this case)

// it wouldn't hurt, but it makes no sense to do it.

// The UUID is set only in "this" object.

}

}Easy! Done! Now let’s extend our logic from above.

public <T extends UpdateableEntity> void updateOrInsert(List<T> elements, BatchEnabledRepository<T,?> repository) {

final List<Predicate> predicates = elements.stream()

.map(UpdateableEntity::getKeyPredicate)

.collect(Collectors.toList());

final Predicate predicate = com.querydsl.core.types.ExpressionUtils.anyOf(predicates.toArray(new Predicate[0]));

final Iterable<T> alreadySaved = repository.findAll(predicate);

final List<T> alreadySavedAsList = Lists.newArrayList(alreadySaved);

//new code

final Map<Object, T> entitiesByKey = alreadySavedAsList.stream()

.collect(Collectors.toMap(T::getKey, Function.identity()));

final List<T> patchedBatchSet = elements.stream()

.map(c -> {

final T savedEntity = entitiesByKey.get(c.getKey());

if (savedEntity != null) {

savedEntity.patch(c);

return savedEntity;

}

return c;

})

.collect(Collectors.toList());

// This list contains now the patched entities from the database

// and the entities from import in case of new ones.

repository.saveAll(patchedBatchSet);

}Oh no! We ran out of RAM!

Available RAM will become a big problem. As mentioned before, 50.000 entries * ~100kb = ~ 4.7Gb. If every entry already existed in the database you will end up with a memory requirement of at least 10 GB of RAM in your request execution. Why is that? This relates to the Session Management in Hibernate / JPA. Hibernate manages a reference to each object that has been touched during session (e.g. retrieved from the database), until the session is completed (the transaction is finished). This allows you to modify entities within a transaction / session and get them persisted without having to worry about saving every entity explicitly. As a consequence, no entity will be garbage collected, as long as the session lives. Additionally, Hibernate stores the original database state of every entity retrieved from the database, in order to support dirty state checking. This is where the doubled memory requirement comes from.

And why do I get StackOverflowExceptions if I have enough memory???

Well, turns out that we cannot create ONE query with 50.000 OR statements. There are limits both by Spring Data and by the DB for query-sizes.

Ok, we kill two birds with one stone! We split our single execution into batches of smaller sizes. Firstly, this solves our query size problem. In my case I choose a batch size of 500 (as 1.000 would already crash with a StackOverflowExceptions). Secondly, it solves our memory problem, if we can ensure that only the objects of batch reside in the memory and everything else can be garbage collected. How to ensure this?

There are multiple ways to tackle this problem.

- You could (and probably should) use

EntityManager.flush()+EntityManager.clear()after each batch to remove the references into the heap (GC will take care of the rest) - You could use individual transactions for each batch

- You could use a

StatelessSession(Hibernate Native)

I ended up using the second approach since the first one did not work for me. I haven’t fully understood why, but for some reason the transaction was still holding a reference to the heap objects (as I could see in JProfiler). The third one falls back to Hibernate native and thereby excludes itself.

As a trade off, with this approach we loose the transactional integrity between the batches. If we set BATCH_SIZE to e.g. 500 and the first 20.000 entities work like a charm but then something goes wrong – we cannot just rollback the transaction, since each batch call will be executed in its own transaction! We will end up with a partially updated database and might have to write our own code to do whatever is required in this case. In my scenario, this is not a real problem.

...

// split all elements in batch-size fragments

// call the method below with the fragments in a loop

// to prevent real "nested" transaction I disabled transaction creation on the outer methods

// (in my case the REST controller) with

@Transactional(propagation = Propagation.NEVER)

...

// Important: The outer and the inner method have to be in different Spring Beans.

// Otherwise, the TransactionalAspect will not work on this method.

@Transactional(propagation = Propagation.REQUIRES_NEW) //creates a new transaction every time

public <T extends UpdateableEntity> void updateOrInsert(List<T> elements, BatchEnabledRepository<T,?> repository) {

...

}Second Level Caching

In my case we have 2nd level caching enabled, which is bad for this use case since the cache will accumulate all objects and again blow the memory up. But this can easily be avoided by turning it off for the current session.

final Session session = entityManager.unwrap(Session.class);

final CacheMode cacheMode = session.getCacheMode();

try {

LOGGER.info("Deactivating 2nd level caching.");

session.setCacheMode(CacheMode.IGNORE);

...

do the work

...

} finally {

LOGGER.info("Reactivating 2nd level caching.");

session.setCacheMode(cacheMode);

// you might wanna clear 2nd level cache here

}More Thoughts on This

In our real world scenario we have an additional requirement: For every row must be determined individually if the value in the database can be overwritten. Some of them are final. To achive that, we added a java.util.Predicate _overwrite_ to the above method to determine if the loaded entity should be overwritten with the new value from the ZIP file.

I personally found this a very hard problem to crack and it brought me quite a few grey hairs but I really like the final solution. Only that I had to use “nested” transactions bugs me, even if that is not a problem in my case, since the system is idempotent.

Collaborate on this?

Unfortunately I could not find the time to put together a working example to put it on github. If you would like to do this I will happily help!